The future of advanced process control will be Artificial Intelligence Augmented Plants (AIAP): combining human expertise with AI to orchestrate complex plant operations and maximise process efficiency, quality control and plant uptime. In this article we look at older forms of artificial intelligence that have been used in the cement industry for decades, and how new approaches are coming of age exploiting advances in other industries with more advanced process control systems.

Advances in process control over the last 20 years have followed the same themes: robotics and automation, combined with the Industrial Internet of Things (IIoT) for real-time monitoring. Process control systems are now exploiting this combination of data fidelity, scope and speed to deliver automated and repeatable process optimisations at scale. The accelerating pace of change in manufacturing systems was highlighted by a McKinsey report in 2021 that showed a 153% increase in US patents registered relating to industrial manufacturing processes, from 220,000 in 1981-2000 to 555,000 in 2001-2020.

Although the underlying themes are similar across industries, what we call ‘Advanced Process Control’ is starting to look increasingly different as sectors diverge. For example, three years ago the FMCG giant Mars Inc deployed a Microsoft Azure cloud-based IIoT Platform across all its 160 manufacturing plants worldwide. As well as using predictions to optimise process controls in the cloud, they are using the tool to hit sustainability targets such as waste usage and waste reductions. General Electric are deploying similar ‘digital twin’ type technologies for ‘in-life’ (constant over their operational lifetime) control across all their fields of activity, from renewable energy production to the remote monitoring of jet engines.

Process engineering in these examples moves from an off-line historic “six-sigma” style data analysis project with written recommendations, to an on-line automated process optimisation using predictions and forecasts.

Process control in cement production has advanced along similar lines, with ‘Digital 4.0’ or IIoT programmes and wide sensor deployment, all delivering massive datasets which are being consolidated and stored. Distributed Control Systems (DCS) from ABB, FLSmidth, Mitsubishi and Siemens are widely deployed with central control rooms looking very similar from Europe to South America and the Middle East.

Where cement has differed is the ability to exploit the resulting massive datasets to drive process improvements.

Historically, the complexity of cement production was a challenge for data analysis and AI

Off-line historical analysis is constrained by the manpower required, and the limited number of trained process engineers across the cement industry has held back many promising optimisation programmes. Studies conducted in cement have additionally been constrained in their impact by the restricted ability to act on insight. For example, the natural variation in raw meal quality from a quarry, and variations in the particle size and calorific value of waste-derived fuels, are not easily controlled. Changes to one element of the cement production process also have unintended knock-on consequences elsewhere in terms of quality, emissions or process stability.

Moving from off-line to live process improvement automation in cement production has faced similar hurdles, particularly for the high-temperature pyro process that is central to cement and governs the efficiency and carbon impact of the process. As summarised well by KIMA Process Controls in their October 2020 article for World Cement, moving from the 1990s control technologies of Fuzzy Logic and Model Predictive Control to “Artificial Neural Networks (ANNs)” was not successful in the prior 10 years.

The challenge for cement has been the sheer number of non-linear, interrelated variables which affect clinker quality. These include both input variables (raw meal quality and its standard deviation; fuel quality, moisture, particle size, standard deviation and cost; kiln coating levels; ambient weather factors; fuel mix; airflow etc) and output control factors (NOx emissions, SOx emissions, Free Lime levels, C3S levels, noise, stability, throughput, cost, blockage avoidance etc).

Even when the computational challenge of the number of interrelated variables can be managed through a mature AI system, two critical challenges remained as barriers.

Firstly, wear and natural variations in the key process components: the underlying process incrementally changes over time through usage, and in step-changes from each unplanned or planned shutdown as components are changed and modified.

Secondly, sensor drift and sensor failure: the harsh operating conditions typically make reliable sensor readings harder to achieve in cement production than in other industries.

The impact of these two elements, combined with the sheer number of interrelated input and output variables, meant that neural networks would have to be continually retrained to adjust to the plant and sensors.

The immaturity of the AI technology behind neural networks at the time made this impractical, given the time and effort required to train a neural network and deploy it into the production control system.

Enter ChatGPT and more widely Generative AI.

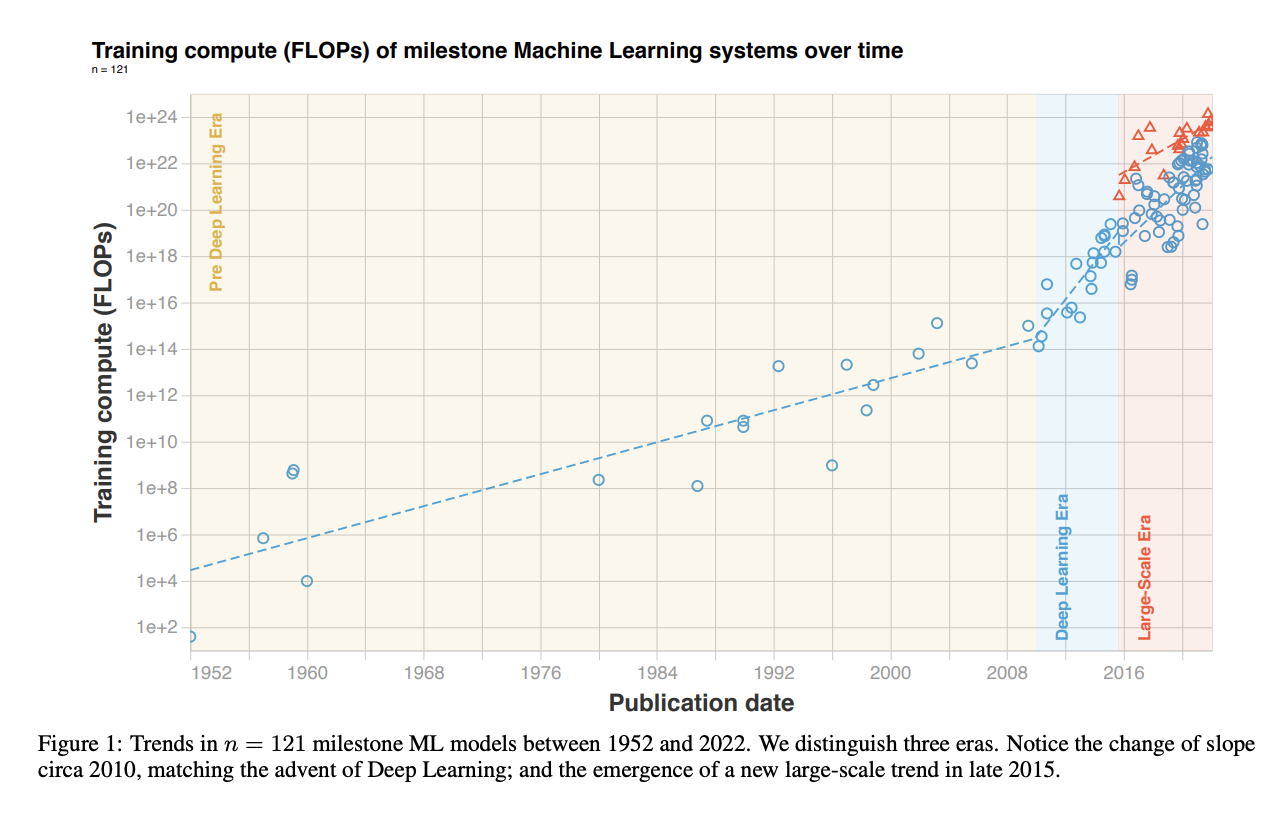

Machine Learning followed a consistent 18-month doubling time of computing power until 2010, when it accelerated in the ‘deep learning’ era. In late 2015, there was a step change with ‘large-scale models’ which started 2 to 3 orders of magnitude over the previous trend, powered by large computing budgets and wider Machine Learning expertise.
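To put the doubling time in perspective, the short sketch below converts a doubling time into a growth factor over a given period. The function name and the 10-year horizon are illustrative assumptions; only the 18-month figure comes from the text above.

```python
def growth_factor(years: float, doubling_time_months: float) -> float:
    """Return how many times computing power multiplies over `years`."""
    doublings = years * 12.0 / doubling_time_months
    return 2.0 ** doublings


# With an 18-month doubling time, a decade gives about 6.7 doublings,
# i.e. roughly a 100-fold increase (about 2 orders of magnitude).
ten_year_growth = growth_factor(years=10, doubling_time_months=18)
```

On this arithmetic, a jump of 2 to 3 orders of magnitude over the trend is comparable to a decade or more of growth at the earlier rate.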

AI models for text and image are different from those needed for cement production.

Generative AI is typically working on a static dataset, rather than a continually evolving set of timestream data with interdependent variables. We do not see text from ChatGPT or images from Stable Diffusion impacting cement production in any direct way. What has changed is that over the last 10 years, the “picks and shovels” or ‘tools’ used for AI model generation have increased in capability, power, ease of use, sophistication and availability by many orders of magnitude.

Scaling the Large Language Models used for Generative AI has created a tool set and process which enables fast and easy retraining and deployment. An example is “Weights & Biases”, used by companies ranging from OpenAI, the industry leader, to BMW, Toyota, Spotify and Meta. It vastly reduces the time required for Machine Learning engineers to build models. How does this transfer to cement production?

To address the issues of plant process changes through wear and deal with sensor drift, machine learning (ML) models need to be constantly retrained and deployed as new data comes in from the cement plant.

Source: https://arxiv.org/pdf/2202.05924.pdf

Advancements in AI mean that models can now be continually updated to match the actual current situation at the cement plant.
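The idea of continual retraining can be illustrated with a deliberately simple sketch: a model that is refitted on a rolling window of the most recent observations, so that it follows a step change in the process (for example after a shutdown). This is a toy illustration under stated assumptions, not the Carbon Re implementation: the one-variable linear model, the class name `RollingModel`, the window size and the synthetic data are all hypothetical, and a production system would use far richer multi-variable, non-linear models.

```python
from collections import deque
from typing import Deque, Tuple


def fit_line(points) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx if sxx else 0.0  # flat line if x does not vary
    return slope, mean_y - slope * mean_x


class RollingModel:
    """Refit on the latest `window` observations every time data arrives."""

    def __init__(self, window: int) -> None:
        # Old observations fall out automatically once the window is full.
        self.points: Deque[Tuple[float, float]] = deque(maxlen=window)
        self.slope, self.intercept = 0.0, 0.0

    def update(self, x: float, y: float) -> None:
        self.points.append((x, y))
        self.slope, self.intercept = fit_line(self.points)

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


# Synthetic data (hypothetical): the process gain steps from 2 to 3,
# standing in for a change made during a shutdown.
model = RollingModel(window=5)
for x in range(10):
    model.update(float(x), 2.0 * x + 1.0)
slope_before = model.slope
for x in range(5):
    model.update(float(x), 3.0 * x + 1.0)
slope_after = model.slope
```

Once the window contains only post-change data, the fitted slope moves from 2 to 3 without any manual intervention. The window length is the key design choice: a short window adapts quickly but is more sensitive to noise.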

This continual model development and deployment is at the heart of the software platform from Carbon Re which is currently live at cement plants in Europe and South America.

In Formula 1 racing, McLaren’s in-race optimisation of the car controls, as the car laps the track in Singapore or Monaco, is completed on servers back in the UK over the internet. This is because the machine learning models depend on server architecture and software services that are only readily available on specialised remote servers provided by companies such as AWS and Microsoft: building an AI-capable data centre at each cement plant would be economically and practically infeasible. As such, the Carbon Re models are both developed and deployed remotely. Model outputs are sent to the local cement plant control room for implementation by the local control system.

With this remote architecture, the Machine Learning models are not yet suited to providing the second-by-second stability needed at a cement plant (although this may come in time to match what is being done in Formula 1 racing). Instead, they work well alongside a local High-Level Control system that provides second-by-second stability and optimisation, typically using Fuzzy Logic and Model Predictive Control. The remote Machine Learning models currently work on a longer time horizon, from 15-minute periods up to hourly, adjusting process control parameters to optimise production according to the priorities defined by the local cement plant management.

The symbiotic relationship between the remote ML models and the local high-level control system goes both ways.

As well as providing higher level optimisations through setpoint recommendations, the ML models also make the local control system, such as an ABB Ability Expert Optimiser, more impactful.

In cement the large variable set with complex interrelationships gives a useful by-product whereby the ML models are able to reliably identify sensor failures. Fixing critical sensor inputs allows the local control system to operate more efficiently and regularly at each cement plant. Critical sensor inputs can be checked against other related indications to confirm accuracy. For example in the Carbon Re technology a Weighfeeder “software sensor” runs in parallel to the actual sensor. Not only does it detect a sensor failure, it also provides an interim measurement which can be used by the Expert System until the physical sensor is repaired at the next plant shutdown.
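The parallel “software sensor” idea can be sketched as a simple comparison between the physical reading and a model estimate. This is a minimal illustration and not the Carbon Re algorithm: the class name `SoftSensorCheck`, the tolerance, the number of consecutive breaches required (`patience`) and the readings are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class SoftSensorCheck:
    tolerance: float   # largest accepted |measured - estimated| (assumed t/h)
    patience: int      # consecutive breaches before a failure is declared
    breaches: int = 0  # running count of consecutive breaches

    def step(self, measured: float, estimated: float) -> Tuple[float, bool]:
        """Return (value passed to the control system, failure flag)."""
        if abs(measured - estimated) > self.tolerance:
            self.breaches += 1
        else:
            self.breaches = 0  # a single good reading resets the count
        failed = self.breaches >= self.patience
        # While the sensor is flagged, substitute the model estimate.
        return (estimated if failed else measured), failed


# Hypothetical weighfeeder readings: the physical sensor drops out at step 3.
check = SoftSensorCheck(tolerance=5.0, patience=2)
measured = [100.0, 101.0, 60.0, 60.0, 60.0]
estimated = [100.0, 100.0, 100.0, 100.0, 100.0]
results = [check.step(m, e) for m, e in zip(measured, estimated)]
```

Requiring several consecutive breaches avoids reacting to a single spike, at the cost of passing one suspect reading through before the estimate takes over.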

Another example of the symbiosis is how Machine Learning models are providing more detailed and regular data inputs to aid the local high-level control system. At most cement plants, sampling of clinker quality for Free Lime and C3S is at hourly or two-hourly intervals. These readings are fed back into the Expert System to reduce underburning and overburning. Remote ML models help in three ways. Firstly, they provide a prediction of the Free Lime and C3S between samples, according to the changes in other interrelated variables in the pre-calciner, kiln and cooler. Secondly, they remove the 1 hour time-lag between taking a sample and feeding back the quality readings, which allows the Expert System to react more quickly to changes in clinker quality. Finally, comparing the ML model output to the clinker sample output acts as a quality retest flag, ensuring the Expert System doesn’t react to poor sample data.
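The first and third of these roles can be sketched in a few lines: use the prediction when no lab sample is available, and raise a retest flag when a lab sample disagrees strongly with the prediction. The function name, the `max_gap` threshold and the choice to hold the prediction while a retest is pending are assumptions made for illustration, not details taken from the deployed system.

```python
from typing import Optional, Tuple


def free_lime_feedback(
    predicted: float,
    lab_sample: Optional[float],
    max_gap: float = 0.5,  # assumed threshold, in percentage points of Free Lime
) -> Tuple[float, bool]:
    """Return (Free Lime value for the Expert System, retest flag)."""
    if lab_sample is None:
        # Between lab samples: fall back on the model prediction.
        return predicted, False
    if abs(lab_sample - predicted) > max_gap:
        # Large disagreement: ask for a retest and hold the prediction
        # so the control system does not react to a suspect sample.
        return predicted, True
    return lab_sample, False


between_samples = free_lime_feedback(predicted=1.2, lab_sample=None)
consistent = free_lime_feedback(predicted=1.2, lab_sample=1.4)
suspect = free_lime_feedback(predicted=1.2, lab_sample=2.5)
```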

From our most recent deployment of the Carbon Re platform at a cement plant in South America, since our Free Lime predictions were made available, the weekly percentage of samples out of bounds has been 32% lower than the prior 3 month period: a reduction in underburning whilst not increasing overburning significantly. Given the performance of the Free Lime soft sensor, the data is now being fed directly into the Expert System with ‘closed loop’ control.
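For readers who want to reproduce this kind of metric on their own data, the sketch below shows one way to compute the share of samples out of bounds and the relative change between two periods. All numbers here are hypothetical and chosen only to make the arithmetic easy to follow; they are not the plant’s data, and reading “32% lower” as a relative reduction is an assumption.

```python
from typing import Sequence


def out_of_bounds_share(samples: Sequence[float], low: float, high: float) -> float:
    """Percentage of samples falling outside the range [low, high]."""
    outside = sum(1 for s in samples if s < low or s > high)
    return 100.0 * outside / len(samples)


def relative_reduction(baseline_pct: float, current_pct: float) -> float:
    """Fractional reduction of current_pct relative to baseline_pct."""
    return (baseline_pct - current_pct) / baseline_pct


# Hypothetical Free Lime samples (%) with assumed bounds of 0.5 to 2.0.
week = [0.8, 1.2, 2.6, 1.5]
share = out_of_bounds_share(week, low=0.5, high=2.0)

# Hypothetical periods: 25% out of bounds before, 17% after.
reduction = relative_reduction(baseline_pct=25.0, current_pct=17.0)
```

In this made-up case one sample in four is out of bounds (25%), and a fall from 25% to 17% corresponds to a 32% relative reduction.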

From our experience, some cement plants are missing critical data points required for this ML model derived optimisation. The most common gap, for optimisation of the fuel efficiency of the kiln, relates to sampling of the fuel as-fired. Due to fuel mix blending and significant variations in fuel NCV and moisture content, if ML models are to find true fuel cost optimisations, they need fuel as-fired sampling regimes to increase from monthly to at least daily. The actual frequency needed depends on the variability of fuel quality and, particularly for Alternative Fuels, the particle size.
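A small worked example shows why the sampling frequency matters. The sketch below computes the mass-weighted NCV of a fuel blend; the fuel names, feed rates and NCV values are hypothetical, and the calculation assumes the NCV of each fuel is already expressed on an as-fired basis.

```python
from typing import Sequence, Tuple

# Each fuel: (name, feed rate in t/h, as-fired NCV in MJ/kg). Values are hypothetical.
Fuel = Tuple[str, float, float]


def blended_ncv(fuels: Sequence[Fuel]) -> float:
    """Mass-weighted average NCV of the fuel mix, in MJ/kg."""
    total_mass = sum(rate for _, rate, _ in fuels)
    total_heat = sum(rate * ncv for _, rate, ncv in fuels)
    return total_heat / total_mass


# NCV assumed from the last (monthly) sample.
assumed_mix = [("coal", 6.0, 26.0), ("alternative fuel", 4.0, 16.0)]
# Same feed rates, but today's alternative fuel is wetter, so its NCV is lower.
actual_mix = [("coal", 6.0, 26.0), ("alternative fuel", 4.0, 12.0)]

ncv_assumed = blended_ncv(assumed_mix)  # 22.0 MJ/kg
ncv_actual = blended_ncv(actual_mix)    # about 20.4 MJ/kg
```

In this illustration the kiln receives about 7% less heat than a model relying on the stale sample would assume, which is the kind of error that more frequent as-fired sampling is meant to remove.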

Benefits from these advances in process control are not just cost reduction through fuel efficiency and carbon emissions reductions, although they deliver the clear and immediate payback from system implementation.

Additional benefits targeted by plants include avoiding blockages, reducing overburning to improve clinker grinding efficiency, and enhancing C3S in order to reduce the clinker factor. System wide changes become possible upstream and downstream of the high temperature pyroprocess.

ML models are exploiting the wider trends in both data and automation: providing forecasts that help stability and keep the Expert System on track, providing data to support the ES, and providing control room operators with setpoint recommendations. Once they have built trust in the new control systems, benefits for control room operators and process engineers will be advanced tools and more time to deliver better results.

And ML model based augmentations are not limited to the key high temperature pyroprocess, although this is the application which offers the most potential benefit. Additional elements being tackled include mining, raw meal grinding, cement grinding, electricity usage and cement mixing.

The future of advanced process control will be Artificial Intelligence Augmented Plants (AIAP) across these process stages: combining human expertise with AI to orchestrate complex plant operations and maximise process efficiency, quality control and plant uptime.

This article was originally published in International Cement Review September Issue 2023.